I’m sure you’ve all heard of Big Data. It refers to the astronomical amount of data available on the Web these days. Engineers who work with this data are developing ever more efficient systems to process and store it.

Today, datasets of several petabytes or even exabytes are no longer exceptional. Developers have software platforms available to handle them. These platforms allow complex computing operations to be carried out successfully by distributing them across multiple nodes.

Apache Hadoop is a widely used framework for this purpose, and it serves as the basis for many Big Data solutions.

What is Apache Hadoop?

The non-profit Apache Software Foundation supports the free open source Apache Hadoop project, but commercial versions have become very common.

The Hadoop cluster improves file storage capacity and redundancy by distributing data across multiple servers.

Different variants of this framework are available today. Cloudera, for example, offers a Hadoop distribution that it describes as “enterprise ready.”

This distribution is open source. Many similar products are also available from other vendors such as Hortonworks and Teradata.

Hadoop structure: construction and essential elements

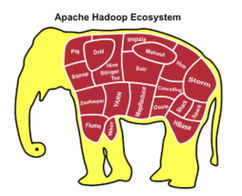

When we talk about Hadoop, we often mean the whole ecosystem around the software. The framework has many extensions, such as Pig, Chukwa, and many others, which make it possible to work with huge amounts of data. The Apache Software Foundation supports all of these projects.

What we call Core Hadoop is the basis of this ecosystem, just as the Debian code is the basis of the Debian ecosystem. Its elements, which are present in every basic version of Hadoop, are Hadoop Common, the Hadoop Distributed File System (HDFS), and the MapReduce Engine.

Hadoop Common

The Hadoop Common package is considered the core of the framework. It provides essential services and processes, such as abstraction of the underlying operating system and its file system. Hadoop Common also contains the Java Archive (JAR) files and scripts needed to start Hadoop. In addition, the package provides source code and documentation, as well as a contribution section that includes various projects from the Hadoop community.

Hadoop Distributed File System (HDFS)

HDFS has many similarities with other distributed file systems but differs from them in several ways. A notable difference is the HDFS write-once-read-many model, which relaxes concurrency control requirements, simplifies data consistency, and enables high-throughput access.

Another unique attribute of HDFS is the view that it is generally better to locate the processing logic close to the data than to move the data to the application space.

You can access HDFS in different ways. HDFS provides a native Java application programming interface (API) and a native C-language wrapper for the Java API. You can also use a web browser to browse HDFS files.

HDFS is composed of groups of interconnected nodes where files and directories reside. An HDFS cluster has a single node, called the NameNode, which manages the file system namespace and regulates client access to files. The other nodes, called DataNodes, store the data as blocks within files.
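To make the NameNode and DataNode roles more concrete, here is a minimal sketch in plain Python of how a file might be cut into blocks and how each block might be assigned to several DataNodes. It does not use the Hadoop API. The function names, the round-robin placement, and the default values (128 MB blocks, three replicas) are assumptions chosen for illustration; real HDFS placement also takes rack topology into account.

```python
# Illustrative only: a toy model of HDFS block placement, not the Hadoop API.

BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size in bytes
REPLICATION = 3                 # assumed number of copies per block


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the length of each block needed to store file_size bytes."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_blocks(file_size, datanodes, block_size=BLOCK_SIZE,
                 replication=REPLICATION):
    """Map each block index to the DataNodes holding a replica.

    This is the kind of bookkeeping the NameNode keeps; the blocks
    themselves would live on the DataNodes. Placement here is simple
    round-robin, which is a simplification.
    """
    if replication > len(datanodes):
        raise ValueError("not enough DataNodes for the requested replication")
    placement = {}
    for index, _ in enumerate(split_into_blocks(file_size, block_size)):
        placement[index] = [
            datanodes[(index + offset) % len(datanodes)]
            for offset in range(replication)
        ]
    return placement
```

Calling `place_blocks(300, ["dn1", "dn2", "dn3", "dn4"], block_size=128)` yields three blocks, each stored on three different nodes, so losing a single DataNode does not lose any block.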

MapReduce Engine

In addition to HDFS, MapReduce is an integral part of Hadoop. Tools such as Pig and Hive are built on the MapReduce engine, so to learn these tools, it is essential to learn MapReduce first.
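For readers new to the model, the following sketch shows the map, shuffle, and reduce phases on a classic word count. It is written in plain Python and runs in a single process, so it only illustrates the programming model, not the Hadoop engine itself. All function names are hypothetical.

```python
# Illustrative only: the MapReduce programming model in a single process.
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce over an iterable of text lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

In a real cluster, the map calls would run in parallel on the nodes that hold the input data, and the shuffle would move the grouped values over the network to the reducers.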

MapReduce jobs are typically written in Java, so developers with Java skills will be able to understand MapReduce faster than developers who work in other languages. The MapReduce job submission steps include validating the input and output specifications of the job and calculating the input splits for the job.

Since the release of Hadoop 0.23, MapReduce has been reworked. The reworked engine is known as MapReduce 2.0 (MRv2) or YARN, and thanks to it the framework now offers many additional features.
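The two submission steps named above can be sketched roughly as follows. This is a simplified illustration in plain Python, not Hadoop's actual implementation: the function names are hypothetical, the file system is modelled as a set of path strings, and the split calculation ignores details such as block boundaries. The check that the output path must not already exist mirrors how Hadoop jobs usually behave, but treat it here as an assumption of the sketch.

```python
# Illustrative only: a toy version of two job submission checks.


def validate_specs(input_paths, output_path, existing_paths):
    """Check the job's input and output specifications.

    existing_paths is a set of paths standing in for the file system.
    Raises ValueError if the job should be rejected.
    """
    if not input_paths:
        raise ValueError("job has no input paths")
    missing = [path for path in input_paths if path not in existing_paths]
    if missing:
        raise ValueError("input paths do not exist: " + ", ".join(missing))
    if output_path in existing_paths:
        raise ValueError("output path already exists: " + output_path)


def compute_splits(file_size, split_size):
    """Return (offset, length) pairs that cover a file of file_size bytes."""
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    return [
        (offset, min(split_size, file_size - offset))
        for offset in range(0, file_size, split_size)
    ]
```

Each resulting split would typically be handed to one map task, which is why the number of splits determines how much of the job can run in parallel.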

Choosing the right hosting solution

When selecting a Hadoop distribution, one of the most crucial questions to ask yourself is whether you want a solution that is hosted on your own premises or in the cloud. An on-premises solution still offers, in theory, the highest level of security, and it is the natural choice when there is no room for compromise on keeping complete control and ownership of your data. Cloud computing solutions, on the other hand, have in recent years become significantly cheaper, more flexible, and easier to scale.

The vast majority of these vendor products can be set up either in the cloud or on the customer’s own premises. However, some cannot be installed locally. These are typically products offered by web service providers like Amazon or Microsoft, which run either the providers’ own distributions or Hadoop distributions from other, platform-focused vendors like Hortonworks or MapR.
